Picture yourself running a busy boutique. You’ve just updated your storefront display and can’t decide which sign draws in more shoppers. So you alternate between two window signs and count how many people step inside with each version. That’s A/B testing.

But incrementality testing asks a different question: how many of those shoppers would have come in anyway? Maybe it’s payday, or a street fair is boosting foot traffic. Incrementality measures the extra lift your display adds on top of that baseline.

If you want to know how incrementality experiments differ from A/B experiments, we’ve got you covered. Inside, you’ll learn what an A/B test is, what an incrementality test is, when to use each methodology, and more.

A/B testing, or split testing, compares two versions of a marketing asset (more examples of such assets below) to see which performs better. The audience is randomly split into groups, and each group sees a different creative variant.

By changing one variable at a time (such as a headline, image, or call-to-action), marketers can isolate what drives better results. In short, A/B testing shows which version performs best, giving you data-driven confidence in your creative decisions.
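Ad platforms such as Meta typically handle the random split for you, but if you run tests in your own systems (an email tool or your storefront, say), one common approach is deterministic hash-based bucketing. The sketch below is a minimal illustration only: the function name `assign_variant`, the experiment label, and the 50/50 split are assumptions chosen for the example.

```python
import hashlib

def assign_variant(user_id: str, experiment: str = "hypothetical-test") -> str:
    """Deterministically assign a user to variant "A" or "B".

    Hashing the experiment name together with the user ID means the same
    user always sees the same variant, while different experiments get
    independent splits.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Map the SHA-256 digest to a bucket from 0 to 99
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100
    # Buckets 0-49 see variant A, buckets 50-99 see variant B (a 50/50 split)
    return "A" if bucket < 50 else "B"

# Hypothetical user IDs, used only to check that the split is roughly even
users = [f"user-{i}" for i in range(10_000)]
share_a = sum(assign_variant(u) == "A" for u in users) / len(users)
print(f"Share of users in A: {share_a:.1%}")
```

Because assignment depends only on the hash, a returning shopper keeps seeing the same variant, which keeps the comparison clean.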

Now, the storefront example paints a clear picture, but in digital marketing and ecommerce, A/B tests usually run online.

Let’s say you’re the marketing manager at BrightCraft Homewares, a DTC brand for modern kitchenware. The team is preparing to launch a new line of eco-friendly cookware and wants to know which ad concept will drive more conversions.

They create two versions of a Meta campaign:

Both ads run to the same audience with identical budgets for two weeks. Version B wins, delivering a 27% higher click-through rate and a 15% boost in conversions.
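Before scaling a winner, it helps to check that the gap is bigger than random noise. Below is a minimal sketch using a standard two-proportion z-test. The click and impression counts are hypothetical (the raw numbers behind BrightCraft’s results aren’t given), and testing tools may use other methods, such as Bayesian models or sequential tests.

```python
import math

def relative_lift(rate_b: float, rate_a: float) -> float:
    """Relative change of B over A, e.g. 0.27 means B is 27% higher."""
    return (rate_b - rate_a) / rate_a

def two_proportion_z_test(successes_a, trials_a, successes_b, trials_b):
    """Two-sided z-test for a difference between two proportions.

    Returns (z statistic, two-sided p-value) using the pooled proportion.
    """
    p_a = successes_a / trials_a
    p_b = successes_b / trials_b
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, computed via math.erf
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value

# Hypothetical counts for illustration only
clicks_a, impressions_a = 1_000, 100_000   # CTR 1.00%
clicks_b, impressions_b = 1_270, 100_000   # CTR 1.27%

ctr_a = clicks_a / impressions_a
ctr_b = clicks_b / impressions_b
z, p = two_proportion_z_test(clicks_a, impressions_a, clicks_b, impressions_b)
print(f"CTR lift: {relative_lift(ctr_b, ctr_a):.0%}, z = {z:.2f}, p = {p:.2g}")
```

With these made-up counts, a 27% CTR lift on 100,000 impressions per version is very unlikely to be chance. With much smaller samples, the same relative lift might not be statistically significant.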

Armed with that data, BrightCraft scales the winning creative across Meta and email, improving efficiency and cutting acquisition costs by 18% the following month.

Much like the Meta ad example above, A/B testing works well in certain use cases. Here are a few of them:

Next up: what is incrementality testing?

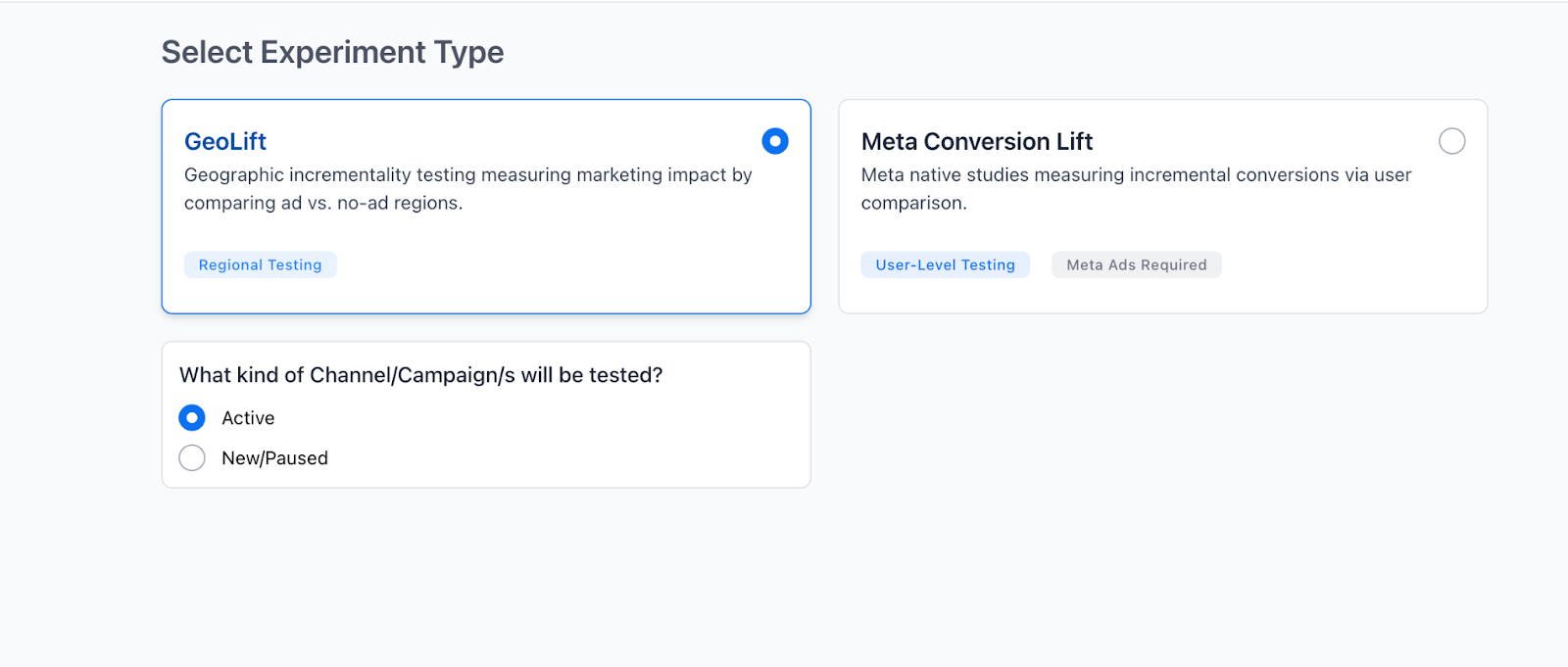

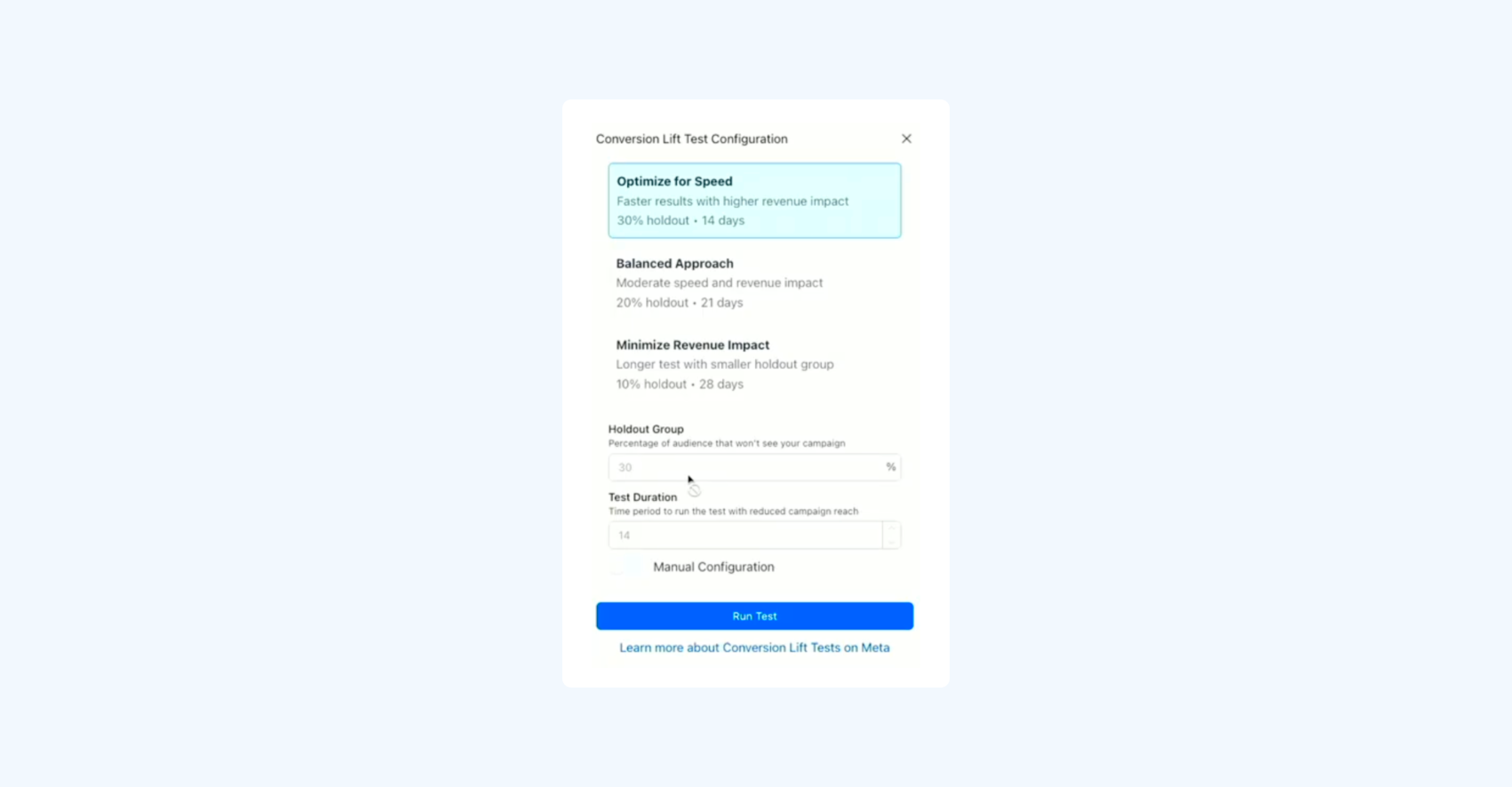

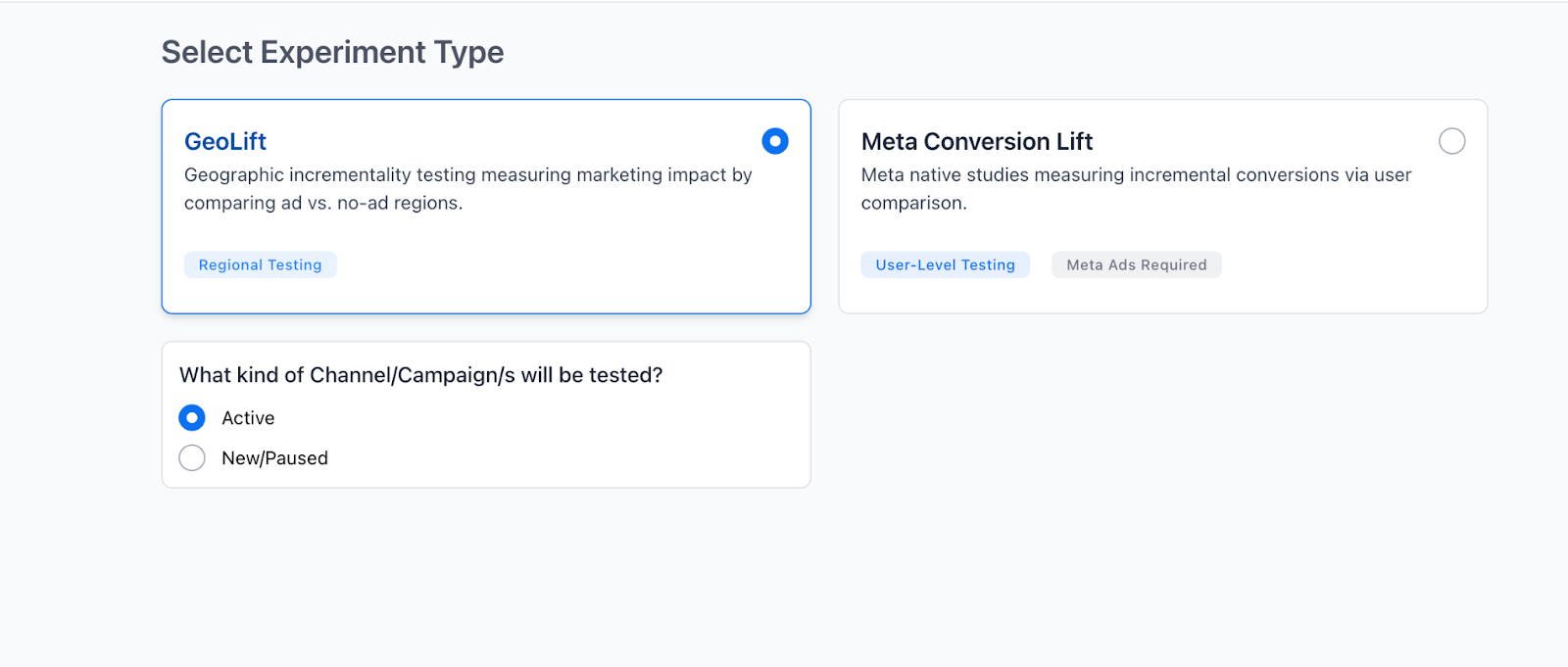

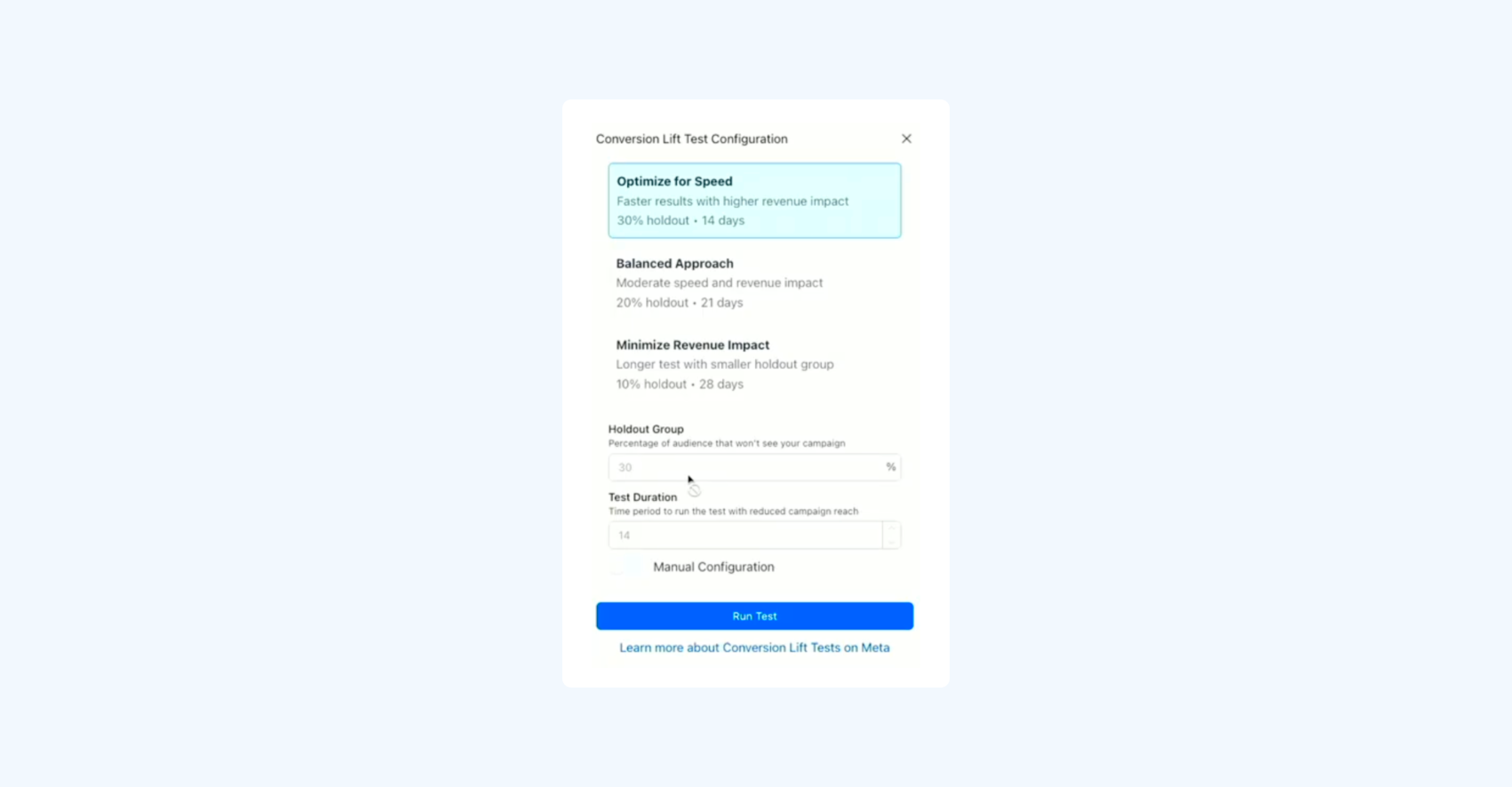

There are different types of incrementality tests. Triple Whale, for example, offers GeoLift and Meta Conversion Lift experiments.

These experiments measure how much of your marketing performance was actually caused by your campaigns. Instead of comparing two versions of an asset, they compare two groups of people or regions (one exposed to your ads and one not) to determine the causal, or true, lift driven by your marketing.

By isolating this effect, marketers can see how many sales, sign-ups, or visits wouldn’t have happened without their campaign. In short, incrementality testing reveals the real impact of your marketing, separating coincidence from true influence.
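At its simplest, the comparison works like this: the control group’s conversion rate stands in for what would have happened anyway, and anything above it in the test group counts as incremental. The sketch below assumes a clean two-group split and hypothetical numbers; tools like GeoLift rely on more sophisticated statistical models, so this shows the idea rather than how any particular product computes its results.

```python
def incrementality_readout(test_conversions: int, test_size: int,
                           control_conversions: int, control_size: int):
    """Compare an exposed (test) group to an unexposed (control) group.

    Returns (incremental conversions in the test group, relative lift).
    The control group's rate estimates what would have happened anyway.
    """
    test_rate = test_conversions / test_size
    baseline_rate = control_conversions / control_size
    # Conversions the test group would likely have produced without ads
    expected_without_ads = baseline_rate * test_size
    incremental = test_conversions - expected_without_ads
    lift = (test_rate - baseline_rate) / baseline_rate
    return incremental, lift

# Hypothetical groups of equal size (illustration only)
incremental, lift = incrementality_readout(1_200, 50_000, 1_000, 50_000)
print(f"Incremental conversions: {incremental:.0f}, lift: {lift:.0%}")
```

In other words, of the 1,200 conversions in the exposed group, roughly 1,000 would likely have happened without the ads; only about 200 are incremental.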

Let’s take you back to BrightCraft Homewares. Now, instead of testing which ad works best, the team wanted to know how much new customer revenue their Meta ads were actually driving.

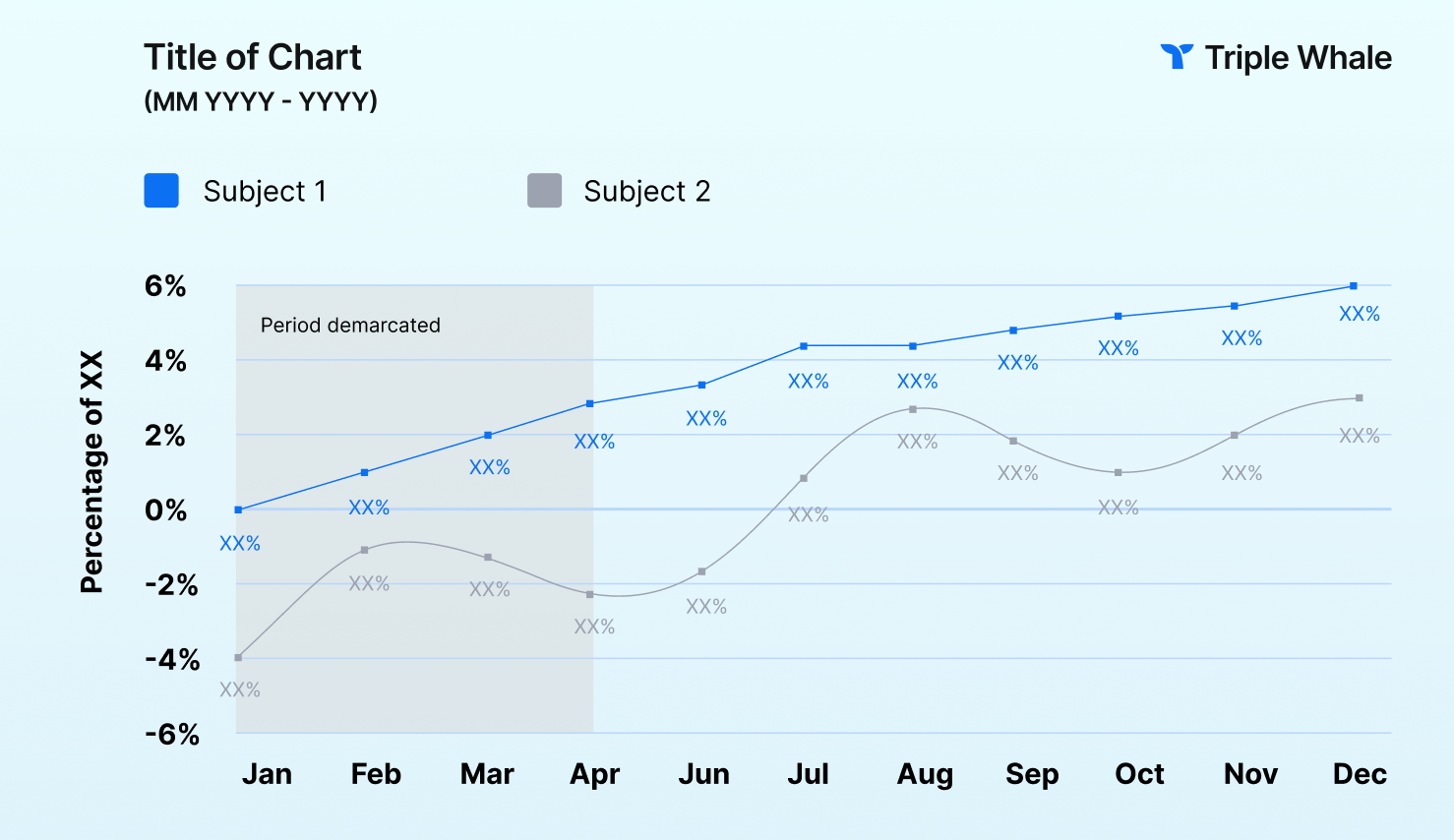

Instead of comparing ad versions, the team used a GeoLift test, a type of incrementality test that measures impact across different geographic regions.

Here’s how it worked: BrightCraft’s marketing team selected “new customer revenue” as the primary metric. Using their analytics tool, they divided U.S. regions into two groups.

They then randomly assigned half of the regions to the test group, where Meta ads continued running normally, and the other half to the control group, where all Meta campaigns were paused.
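Random assignment is what lets the control group serve as a fair baseline. Here is a minimal sketch of a seeded 50/50 split, assuming a hypothetical list of region names and a hypothetical `split_regions` helper. Many geo-testing tools go further and pick test and control markets whose historical sales track each other closely, rather than shuffling purely at random.

```python
import random

def split_regions(regions: list[str], seed: int = 7) -> tuple[list[str], list[str]]:
    """Randomly split regions into (test, control) halves.

    A fixed seed makes the assignment reproducible, so the team can
    document exactly which regions kept ads running.
    """
    shuffled = list(regions)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical regions for illustration
regions = ["Northeast", "Southeast", "Midwest", "Southwest", "Mountain", "Pacific"]
test_group, control_group = split_regions(regions)
print("Ads on (test):", test_group)
print("Ads paused (control):", control_group)
```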

The tool automatically recommended how long to run the experiment (four weeks) and how many regions to include in each group, based on BrightCraft’s expected incremental ROAS (iROAS).

In this case, the team estimated a modest iROAS of 2.5, informed by historical campaign performance.
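iROAS is typically calculated as incremental revenue divided by the ad spend that produced it, so an iROAS of 2.5 means each $1 of spend generated about $2.50 in revenue that wouldn’t have happened otherwise. The sketch below uses hypothetical spend and revenue figures, not BrightCraft’s actual results.

```python
def iroas(incremental_revenue: float, ad_spend: float) -> float:
    """Incremental return on ad spend: incremental revenue per $1 spent."""
    if ad_spend <= 0:
        raise ValueError("ad_spend must be positive")
    return incremental_revenue / ad_spend

# Hypothetical: $40,000 of Meta spend in the test regions drove
# $100,000 of new-customer revenue beyond the control baseline.
print(iroas(100_000, 40_000))  # 2.5
```

Comparing this figure with platform-reported ROAS can be revealing: platform ROAS credits every attributed sale, while iROAS counts only the sales the ads actually caused.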

Once the test concluded, the results were clear:

While not groundbreaking, the results gave BrightCraft confidence that their Meta spend was indeed contributing meaningful incremental growth — not just retargeting customers who would have purchased anyway.

Measuring whether people would have purchased without seeing an ad is one great use case. Here are a few other good times to run an incrementality test:

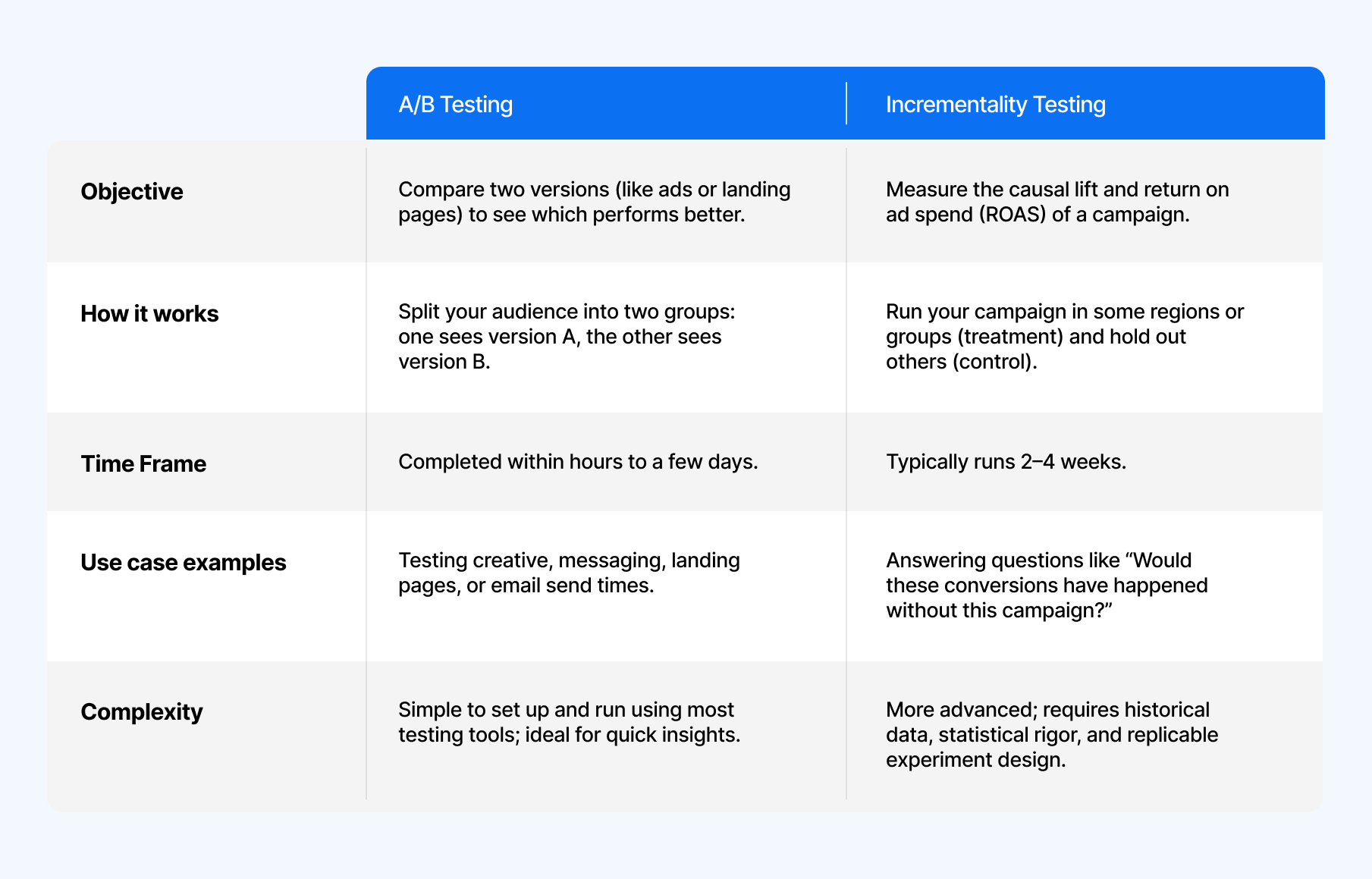

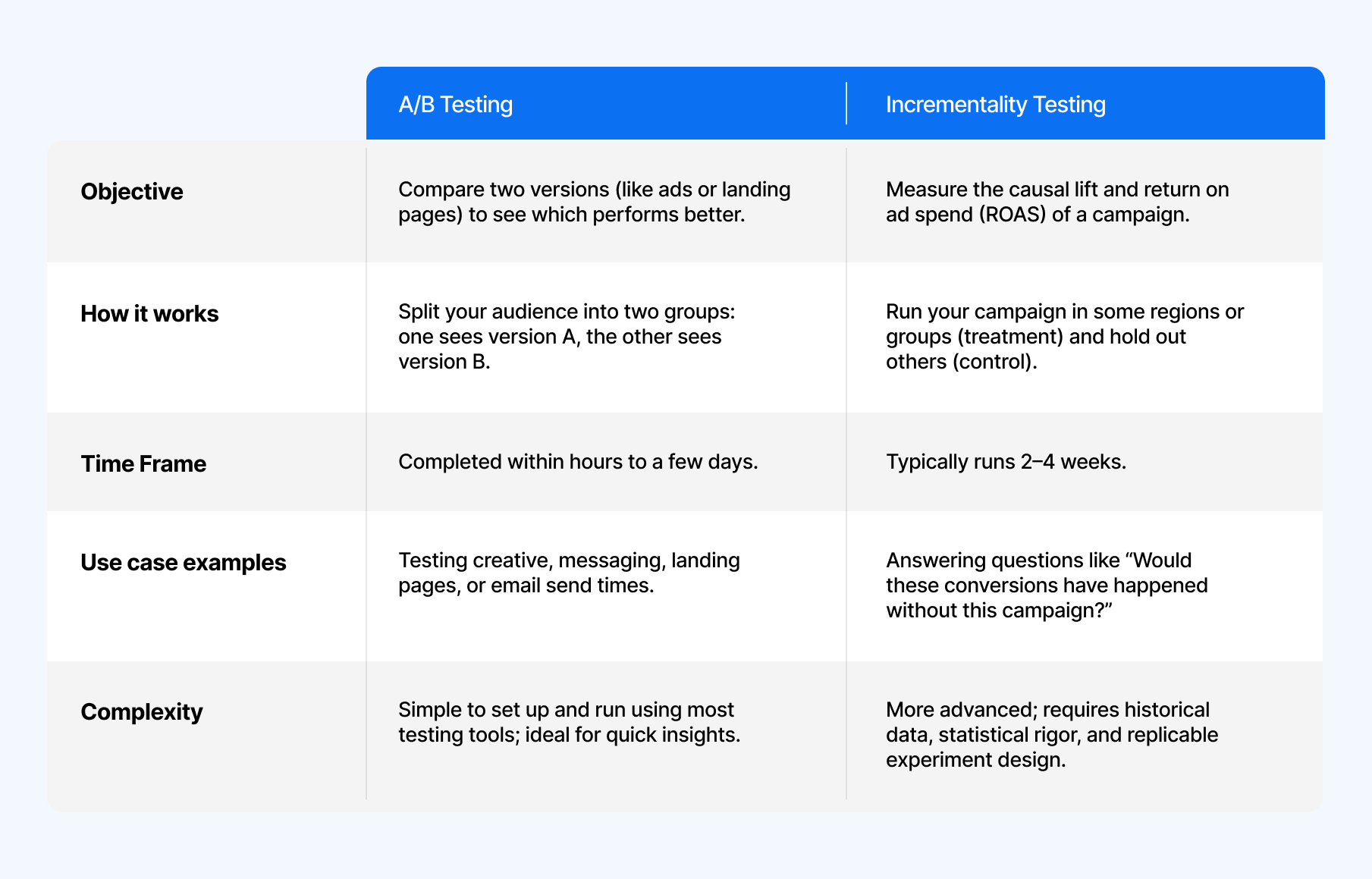

If you’re wondering how incrementality experiments differ from A/B experiments, here’s a quick summary.

Incrementality testing and A/B testing both split audiences at random to compare outcomes (each is a kind of randomized controlled trial), but what they measure is very different. A/B testing is an optimization tool: it compares two versions of something to see which performs better.

Incrementality testing, on the other hand, zooms out. Instead of asking which version works best, it asks: did this marketing effort make a difference at all?

If you jump straight into A/B testing, you might end up optimizing creative in a channel that isn’t actually driving incremental results. That’s why it’s smart to start by asking: Is this channel even moving the needle?

So when should you use each method?

Because incrementality testing is more complex, it’s best suited for larger brands spending millions annually across multiple channels. It reveals the causal impact of your marketing so you can confidently invest where it matters most.

So why is A/B testing important? For smaller brands (or those without the data depth, time, or budget), A/B testing is a great source of quick wins. It’s accessible and helps you measure what’s resonating while you build toward deeper, more comprehensive measurement.

These two measurement methods aren’t an either/or choice. They can work in harmony to give your brand more powerful insights.

You can use incrementality testing to confirm whether a campaign or channel truly drives new value, then layer in A/B testing to refine the creative, audience, or messaging within that proven channel.

For example:

As your marketing mix grows, you’ll need both macro-level proof of impact and micro-level insights on performance details. Together, A/B testing and incrementality testing give you exactly that.

Both incrementality and A/B testing methodologies give marketers powerful lenses for understanding performance. A/B testing helps you fine-tune what works best within a campaign, while incrementality testing uncovers whether that campaign is actually driving new growth in the first place.

With Triple Whale’s Unified Measurement, you don’t have to settle for bits and pieces of the puzzle. Bring MMM, MTA, and Incrementality Testing together in one platform to see the full story behind every conversion.

Ready to test what’s truly working in your marketing? Schedule a demo today.
